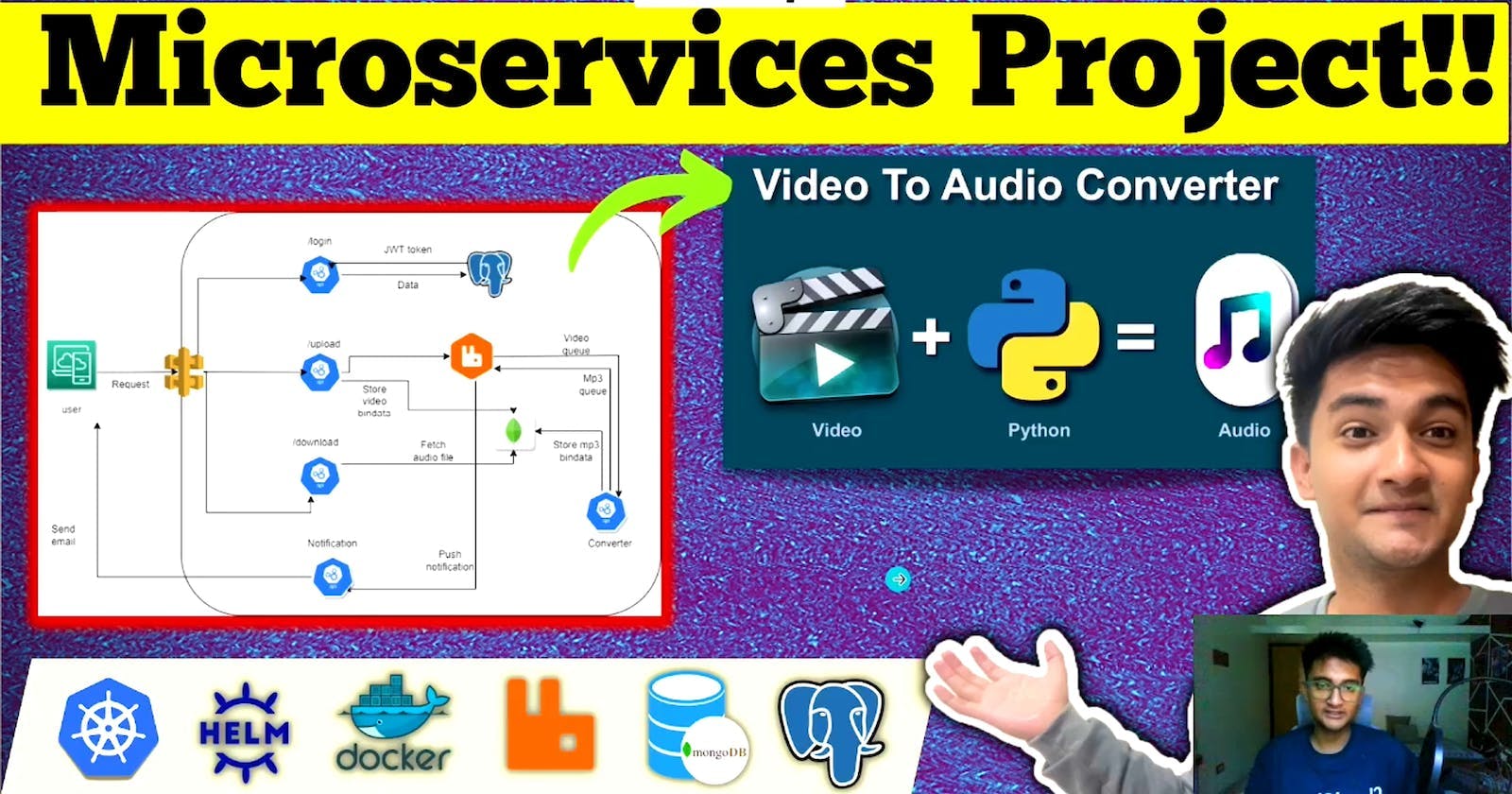

In today’s fast-paced world of software development, deploying scalable and reliable microservice applications is essential. Microservices allow for the efficient management of various components of a complex application, ensuring flexibility and robustness. To help you navigate this journey, we’ve prepared a comprehensive step-by-step guide on deploying a Python-based microservice application on AWS Elastic Kubernetes Service (EKS).

This application is composed of four microservices: auth-service, gateway-service, converter-service, and notification-service. They rely on PostgreSQL and MongoDB for storage and on RabbitMQ for messaging. By the end of this guide, you'll have a fully operational microservices-based application running on AWS EKS.

All credit goes to Nasiullha Chaudhari.

YouTube video: https://youtu.be/jHlRqQzqB_Y?si=oVjnBLWD5JEs_dFW

Prerequisites:

Before we dive into the deployment process, make sure you’ve completed the following prerequisites:

Create an AWS Account: If you're new to AWS, create an account first. You need it to access the services and resources used in this deployment.

Install Helm: Helm, the Kubernetes package manager, simplifies deploying applications to Kubernetes clusters. The setup script used later in this guide installs it for you.

Python Installation: Ensure that Python is installed on your system. You can download it from the official Python website if needed.

AWS CLI: The AWS Command Line Interface (CLI) lets you manage AWS services from your terminal. You'll use it to interact with AWS resources throughout this guide.

kubectl Installation: kubectl is the command-line tool for interacting with Kubernetes clusters, and you need it to manage your EKS cluster.

Database Setup: The application relies on both PostgreSQL and MongoDB. Both are deployed into the cluster with Helm later in this guide. The setup script also installs them locally, which provides the psql and mongosh clients used to connect to them.

With these prerequisites in place, you’ll be well-prepared to embark on your journey to deploy a Python-based microservice application on AWS EKS. This guide will take you through each step, providing clear instructions and best practices to ensure your success.

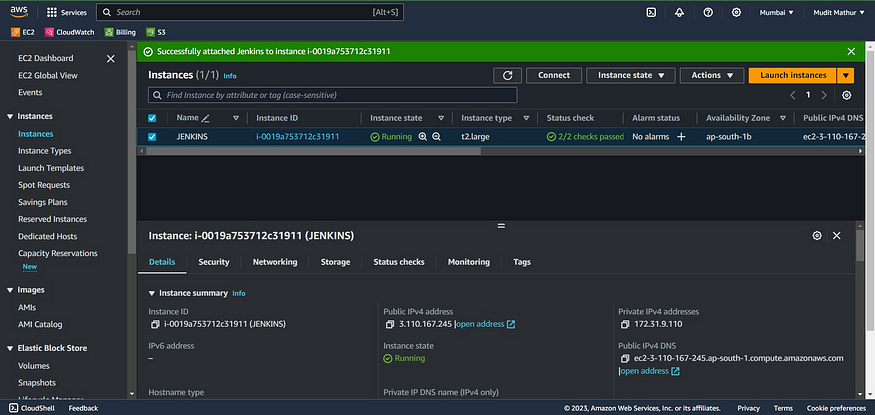

1. 🚀 Launch an EC2 Instance

To launch an EC2 instance running Ubuntu 22.04 from the AWS Management Console, sign in to your AWS account, open the EC2 dashboard, and click "Launch instances." Select "Ubuntu Server 22.04 LTS" as the AMI and "t2.medium" as the instance type. Configure the instance details, storage, tags, and security group to suit your requirements. Make sure the security group allows SSH (port 22) so you can connect. Create or select a key pair for secure access, review the settings, and launch the instance. Once it is running, connect to it over SSH using that key pair.

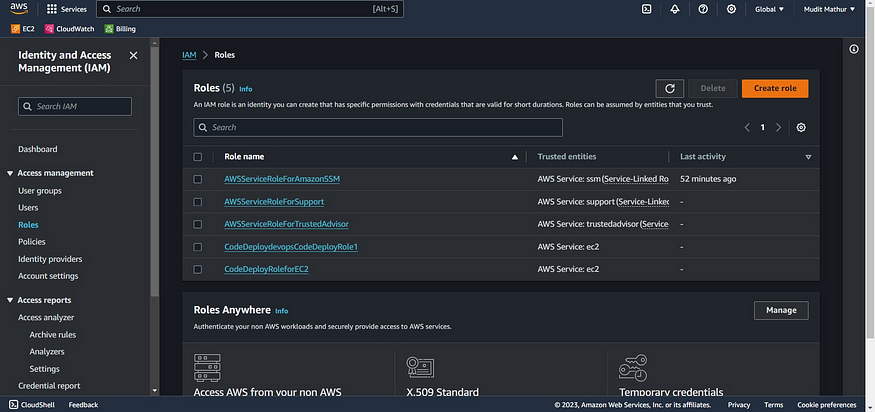
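If you prefer the AWS CLI to the console, the same launch can be sketched as two shell functions. This sketch is not part of the original guide. It assumes the following:

- The CLI is already configured on the machine you run it from.
- A key pair and a security group that allows SSH already exist. Their names are placeholders you pass in.
- The newest Ubuntu 22.04 AMI can be found using Canonical's public owner ID (099720109477) and its Jammy image naming.

```bash
# Sketch only. Assumes a configured AWS CLI plus an existing key pair and a
# security group that allows SSH (port 22); both are passed in as arguments.

# Print the newest Ubuntu 22.04 (Jammy) amd64 AMI ID published by Canonical
# (owner ID 099720109477).
latest_jammy_ami() {
  aws ec2 describe-images \
    --owners 099720109477 \
    --filters "Name=name,Values=ubuntu/images/hvm-ssd*/ubuntu-jammy-22.04-amd64-server-*" \
    --query 'sort_by(Images, &CreationDate)[-1].ImageId' \
    --output text
}

# launch_instance KEY_NAME SECURITY_GROUP_ID
# Launch one t2.medium instance from that AMI and print its instance ID.
launch_instance() {
  local key_name=$1 sg_id=$2 ami
  ami=$(latest_jammy_ami) || return 1
  aws ec2 run-instances \
    --image-id "$ami" \
    --instance-type t2.medium \
    --key-name "$key_name" \
    --security-group-ids "$sg_id" \
    --count 1 \
    --query 'Instances[0].InstanceId' \
    --output text
}
```

For example, `launch_instance my-key sg-0123456789abcdef0` prints the new instance ID. Both arguments here are hypothetical.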

2. 🤖 Create an IAM Role

Navigate to the AWS Management Console.

Click the "Search" field.

Type "IAM" and press Enter.

Click “Roles”

Click “Create role”

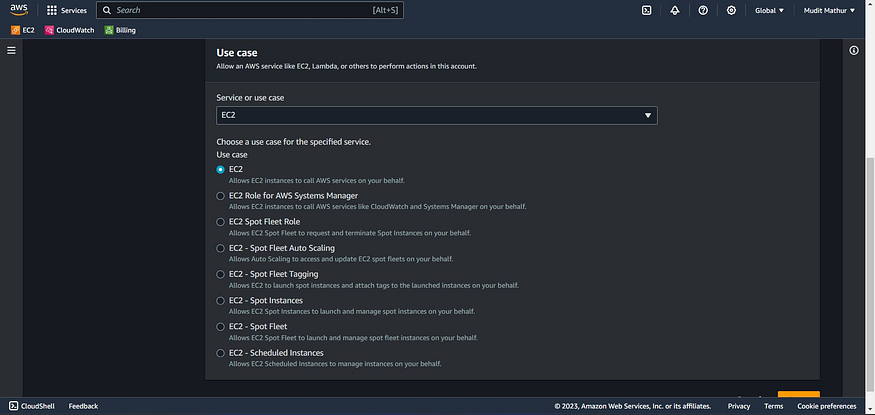

Click “AWS service”

Click “Choose a service or use case”

Click “EC2”

Click “Next”

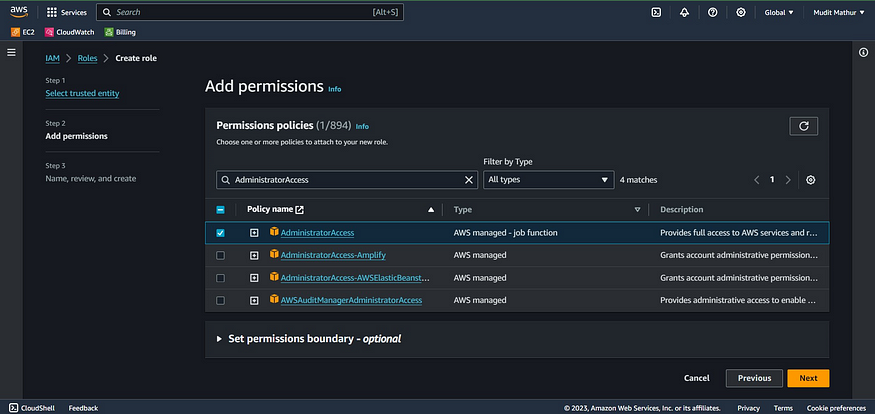

Click the “Search” field.

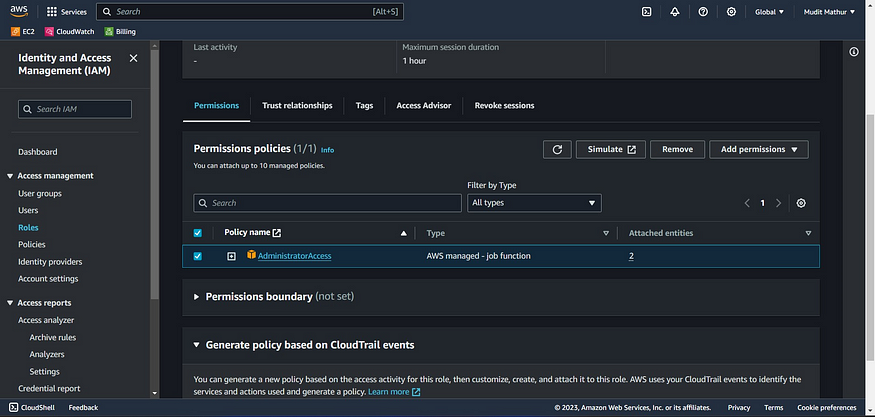

Add permissions policies

Administrator Access

Click Next

Click the “Role name” field, Add the name

Click “Create role” (JUST SAMPLE IMAGE BELOW ONE)

Click “EC2”

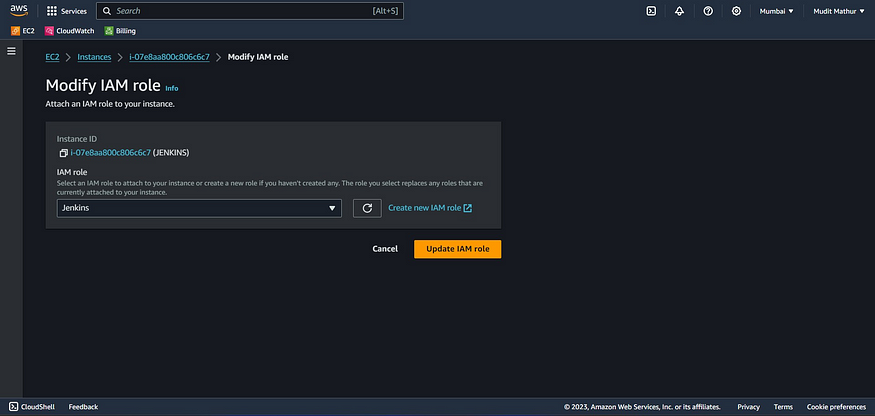

Now attach this role to your EC2 instance:

Select the instance → Actions → Security → Modify IAM role.

Select the newly created role and click "Update IAM role."
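The console steps above can also be sketched with the AWS CLI. The sketch makes these assumptions:

- The role and its instance profile share one hypothetical name.
- The instance ID is the instance you launched.
- You run it from a machine that already has IAM permissions.

The console creates the instance profile automatically when you pick the EC2 use case, but the CLI does not, so the sketch creates it explicitly.

```bash
# Sketch only: ROLE_NAME is a hypothetical name used for both the role and
# its instance profile; INSTANCE_ID is the instance launched in step 1.

# Trust policy that lets EC2 assume the role.
TRUST_POLICY='{
  "Version": "2012-10-17",
  "Statement": [{
    "Effect": "Allow",
    "Principal": {"Service": "ec2.amazonaws.com"},
    "Action": "sts:AssumeRole"
  }]
}'

# attach_admin_role ROLE_NAME INSTANCE_ID
attach_admin_role() {
  local role=$1 instance_id=$2
  aws iam create-role --role-name "$role" \
    --assume-role-policy-document "$TRUST_POLICY" || return 1
  aws iam attach-role-policy --role-name "$role" \
    --policy-arn arn:aws:iam::aws:policy/AdministratorAccess || return 1
  # EC2 picks up roles through an instance profile, so create one and add the role.
  aws iam create-instance-profile --instance-profile-name "$role" || return 1
  aws iam add-role-to-instance-profile \
    --instance-profile-name "$role" --role-name "$role" || return 1
  aws iam wait instance-profile-exists --instance-profile-name "$role" || return 1
  # Equivalent of Actions -> Security -> Modify IAM role in the console.
  aws ec2 associate-iam-instance-profile \
    --instance-id "$instance_id" \
    --iam-instance-profile "Name=$role"
}
```

IAM changes can take a few seconds to propagate, so the final association may need a retry.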

Now connect to your EC2 instance using PuTTY, MobaXterm, or a PowerShell terminal.

Clone this repository:

```bash
git clone https://github.com/mudit097/microservices-python-app.git
```

Change into the project directory:

```bash
cd microservices-python-app/microservices-python-app-main
```

First, install Docker:

```bash
# Install Docker and let the ubuntu user run it
sudo apt-get update
sudo apt-get install docker.io -y
sudo usermod -aG docker ubuntu
# Start a new shell in which the docker group membership is active
newgrp docker
# Makes the Docker socket writable by every user (convenient, not secure)
sudo chmod 777 /var/run/docker.sock
```

The repository's script.sh installs the remaining tools with commands like these:

```bash
#!/bin/bash

# Install Terraform
sudo apt install wget -y
wget -O- https://apt.releases.hashicorp.com/gpg | sudo gpg --dearmor -o /usr/share/keyrings/hashicorp-archive-keyring.gpg
echo "deb [signed-by=/usr/share/keyrings/hashicorp-archive-keyring.gpg] https://apt.releases.hashicorp.com $(lsb_release -cs) main" | sudo tee /etc/apt/sources.list.d/hashicorp.list
sudo apt update && sudo apt install terraform

# Install kubectl
sudo apt update
sudo apt install curl -y
curl -LO https://dl.k8s.io/release/$(curl -L -s https://dl.k8s.io/release/stable.txt)/bin/linux/amd64/kubectl
sudo install -o root -g root -m 0755 kubectl /usr/local/bin/kubectl
kubectl version --client

# Install AWS CLI
curl "https://awscli.amazonaws.com/awscli-exe-linux-x86_64.zip" -o "awscliv2.zip"
sudo apt-get install unzip -y
unzip awscliv2.zip
sudo ./aws/install

# Install Helm
curl -fsSL -o get_helm.sh https://raw.githubusercontent.com/helm/helm/main/scripts/get-helm-3
chmod 700 get_helm.sh
./get_helm.sh

# Install Python
sudo apt update
sudo apt install python3 -y

# Install PostgreSQL, start it now, and enable it on boot
sudo apt update
sudo apt install -y postgresql postgresql-contrib
sudo systemctl start postgresql
sudo systemctl enable postgresql

# Install MongoDB 6.0 from MongoDB's apt repository
sudo apt update
sudo apt install wget curl gnupg2 software-properties-common apt-transport-https ca-certificates lsb-release -y
curl -fsSL https://www.mongodb.org/static/pgp/server-6.0.asc | sudo gpg --dearmor -o /etc/apt/trusted.gpg.d/mongodb-6.gpg
echo "deb [ arch=amd64,arm64 ] https://repo.mongodb.org/apt/ubuntu $(lsb_release -cs)/mongodb-org/6.0 multiverse" | sudo tee /etc/apt/sources.list.d/mongodb-org-6.0.list
sudo apt update
sudo apt install mongodb-org -y
sudo systemctl enable --now mongod
sudo systemctl status mongod
mongod --version
```

Give script.sh executable permissions and run it:

```bash
sudo chmod +x script.sh
./script.sh
```

This script does the following:

Installs Terraform.

Installs kubectl.

Installs AWS CLI.

Installs Helm.

Installs Python.

Installs PostgreSQL.

Installs MongoDB.

It sets up your system with the necessary tools and services for your environment.

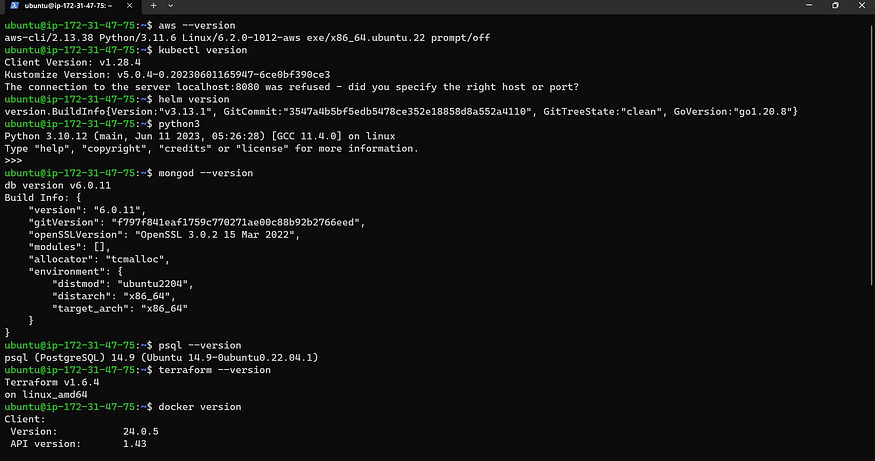

Once the script finishes, check the installed versions:

```bash
# Check versions
aws --version
kubectl version --client
helm version
python3 --version
mongod --version
psql --version
terraform --version
docker version
```

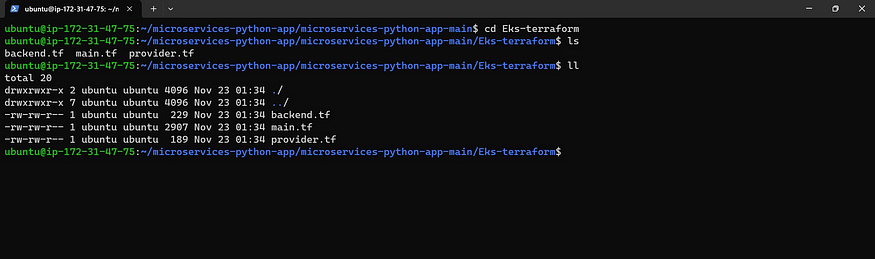

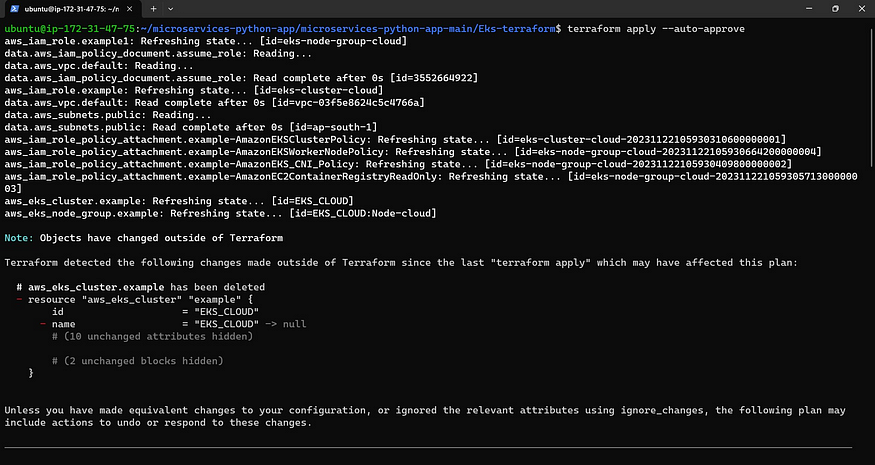
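Rather than reading each version line, a quick sketch can report which of these commands are not on your PATH at all. The function name is hypothetical. It only checks that each command exists, not which version it is.

```bash
# Hypothetical helper: missing_tools TOOL...
# Print every tool name that cannot be found on PATH, one per line.
missing_tools() {
  local tool
  for tool in "$@"; do
    command -v "$tool" >/dev/null 2>&1 || echo "$tool"
  done
}
```

Run `missing_tools aws kubectl helm python3 mongod psql terraform docker`. Empty output means every command was found.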

Now go into the Eks-terraform directory to provision the EKS cluster with the Terraform files:

```bash
cd Eks-terraform
```

Here you will find the Terraform files. Make sure to change the region and the S3 bucket name in the backend file to your own.

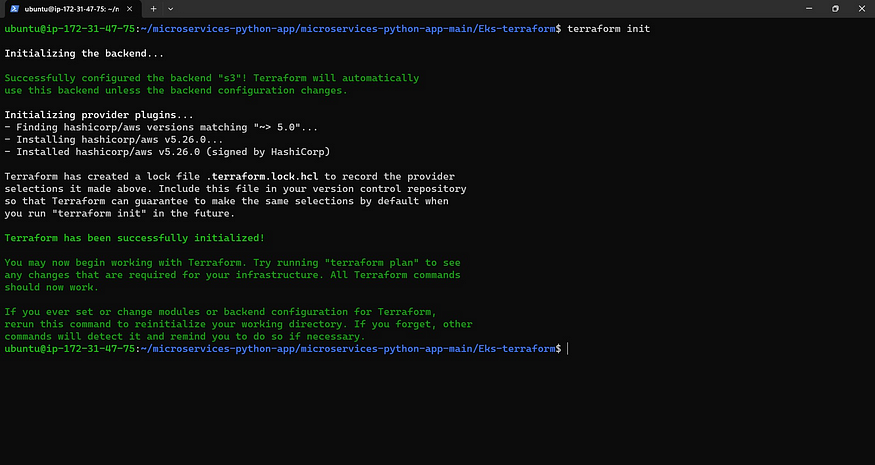
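The backend file stores Terraform state in the S3 bucket you name there, and `terraform init` expects that bucket to exist already. If you still need to create it, a minimal sketch follows. The bucket name and region are your own placeholders and must match the backend file. Enabling versioning is an optional extra that keeps earlier state files recoverable.

```bash
# Sketch only: BUCKET and REGION are your own values and must match the
# backend file. S3 bucket names are globally unique.

# create_state_bucket BUCKET REGION
create_state_bucket() {
  local bucket=$1 region=$2
  if [ "$region" = "us-east-1" ]; then
    # us-east-1 is the default location and rejects an explicit LocationConstraint.
    aws s3api create-bucket --bucket "$bucket" --region "$region" || return 1
  else
    aws s3api create-bucket --bucket "$bucket" --region "$region" \
      --create-bucket-configuration "LocationConstraint=$region" || return 1
  fi
  # Optional: keep previous versions of the state file.
  aws s3api put-bucket-versioning --bucket "$bucket" \
    --versioning-configuration Status=Enabled
}
```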

Now initialize

terraform init

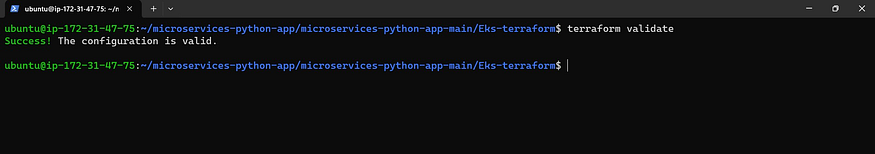

Validate the code

terraform validate

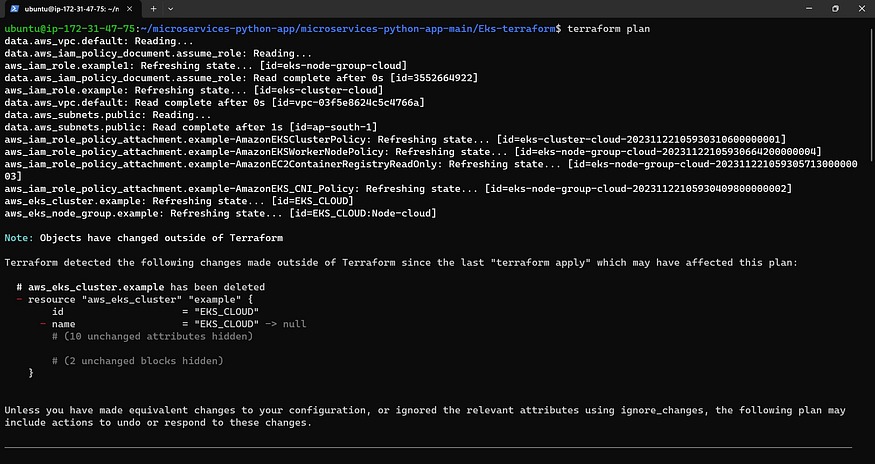

Let’s see plan

terraform plan

Let’s apply to provision

terraform apply --auto-approve

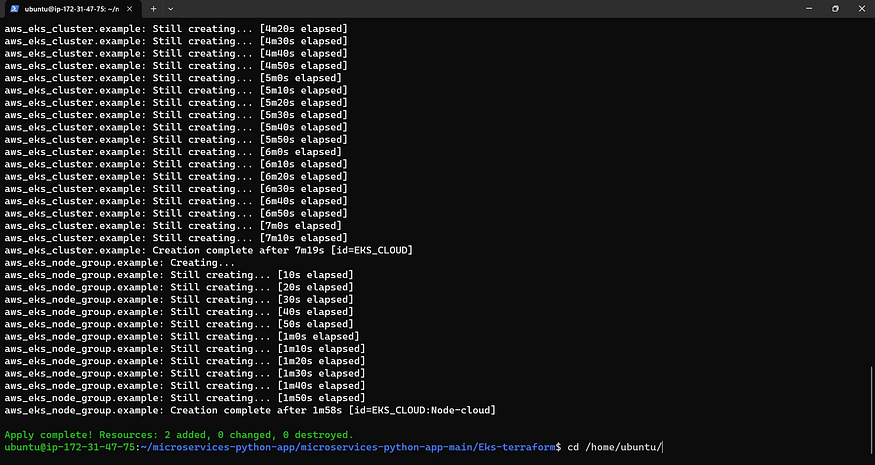

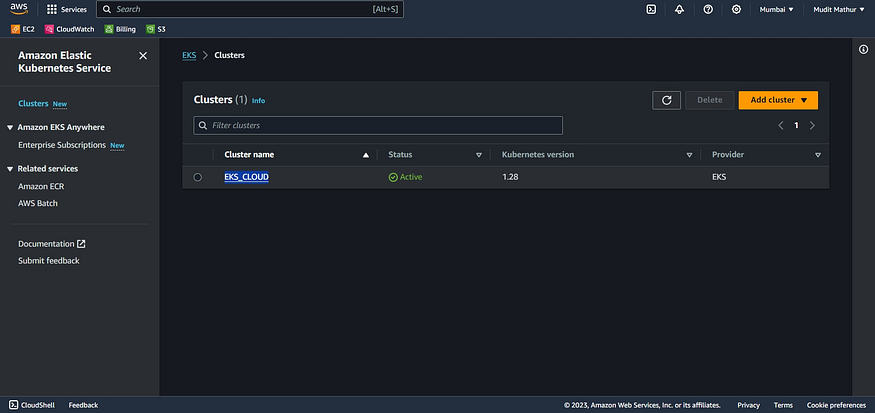

It will take 10 minutes to provision

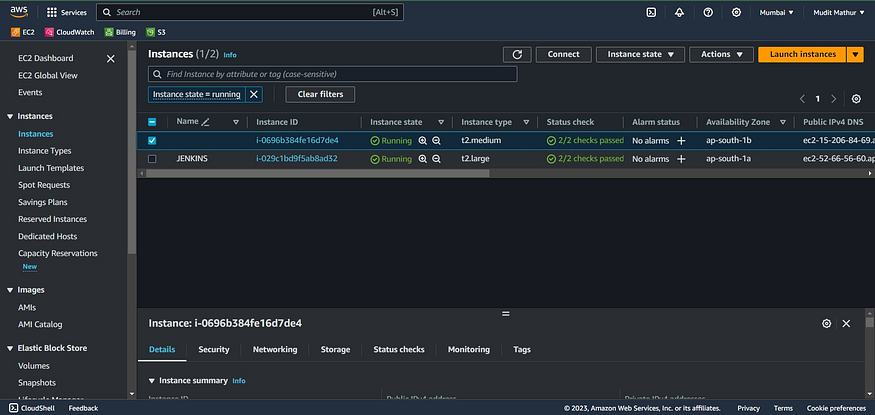

It will create a cluster and node group with one ec2 instance

Node group ec2 instance

Now come outside of the Eks-terraform directory.

cd ..

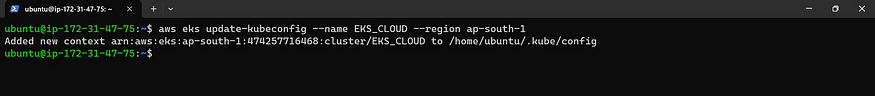

Now update the Kubernetes configuration

aws eks update-kubeconfig --name <cluster-name> --region <region>

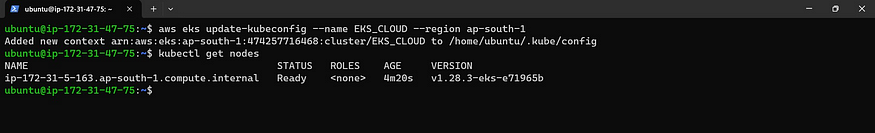

Let’s see the nodes

kubectl get nodes

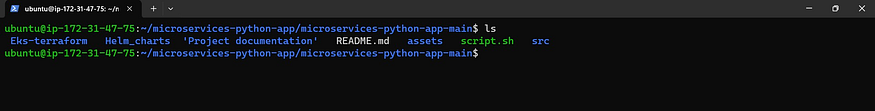

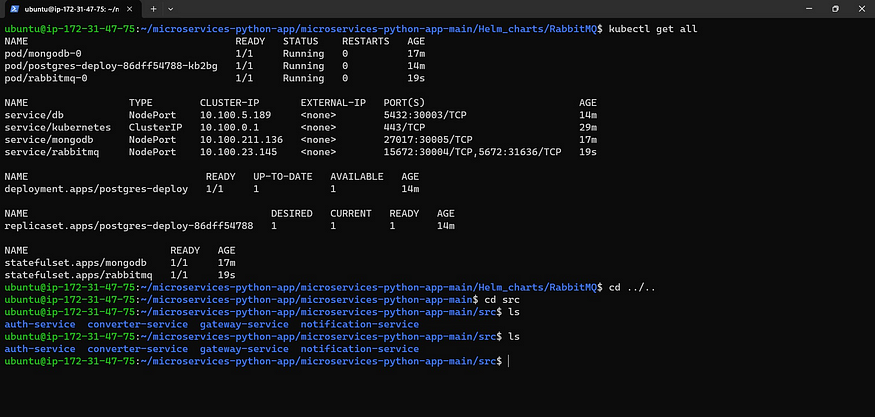

List the directory contents; you will see Helm_charts:

```bash
ls -a
```

Go into Helm_charts:

```bash
cd Helm_charts
ls
```

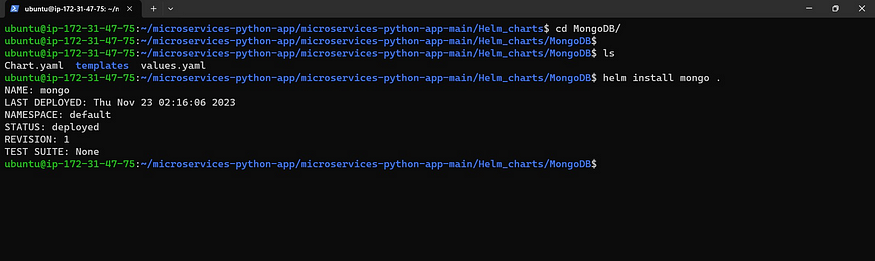

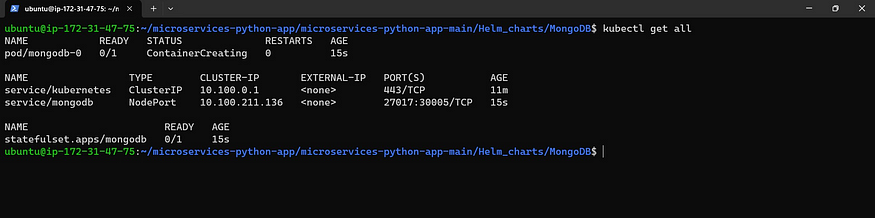

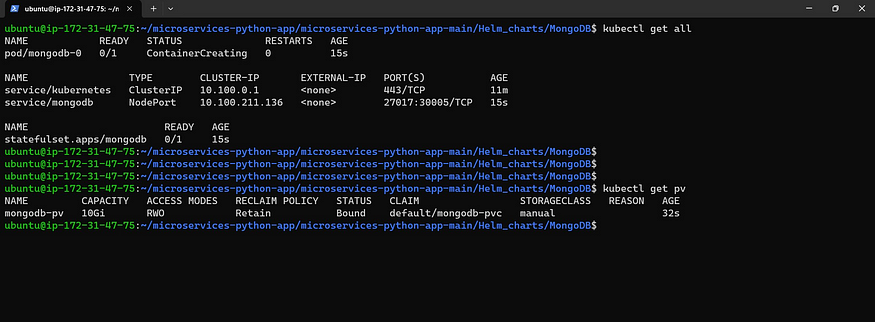

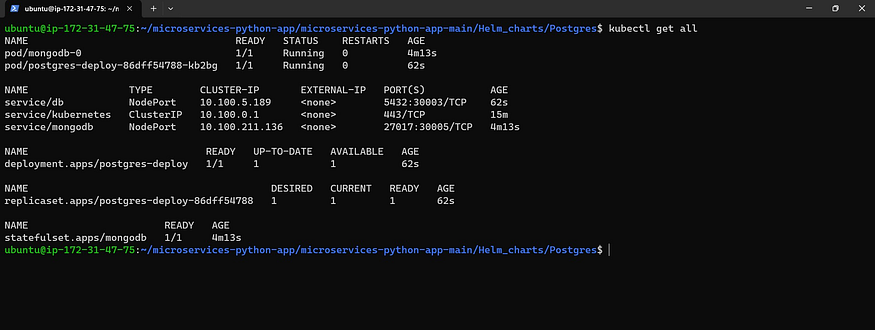

Now go into the MongoDB directory and install the chart, which applies all of its deployment and service files:

```bash
cd MongoDB
ls
helm install mongo .
```

Check the created resources:

```bash
kubectl get all
```

Check the persistent volume:

```bash
kubectl get pv
```
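`helm install` fails if a release with the same name already exists, which makes rerunning these steps awkward. An alternative is to use `helm upgrade --install`, which installs the release the first time and upgrades it afterwards. Adding `--wait` makes the command return only after the chart's resources are ready. The helper name and the 5-minute default timeout are illustrative choices, not part of the original charts.

```bash
# Hypothetical helper: deploy_chart RELEASE CHART_DIR [TIMEOUT]
deploy_chart() {
  local release=$1 chart_dir=$2 timeout=${3:-5m}
  # Install on the first run, upgrade on later runs, and wait until the
  # chart's Pods, PVCs, Services, and workloads are ready (or time out).
  helm upgrade --install "$release" "$chart_dir" --wait --timeout "$timeout" || return 1
  kubectl get all
}
```

With this helper, run `deploy_chart mongo .` from the MongoDB directory. The same pattern works for `postgres` and `rabbit` in their own directories.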

Now come back

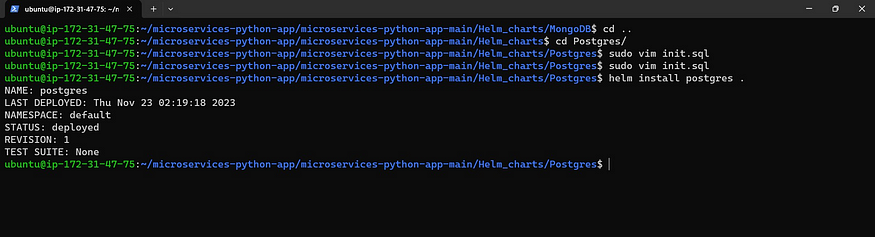

cd ..

cd Postgres

sudo vi init.sql

Change your mail here

Apply the Kubernetes files using Helm

helm install postgres .

Let’s see the pods and deployments

kubectl get all

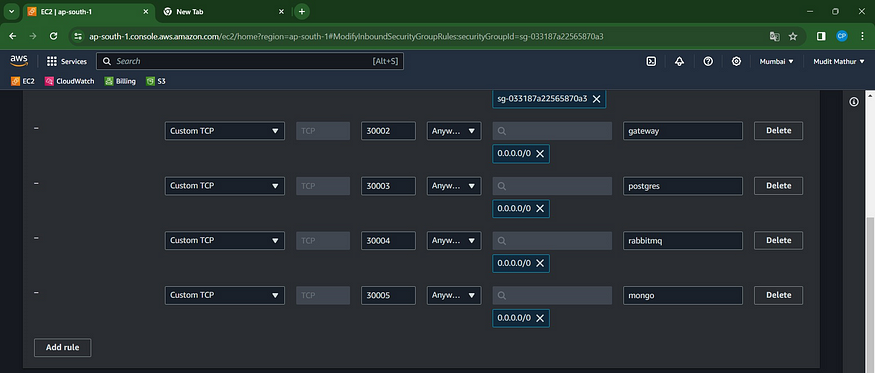

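Next, open the NodePorts used in this guide on the node group EC2 instance.

Go to the node group EC2 instance and select its security group.

Add inbound rules for these ports: 30002 (gateway), 30003 (PostgreSQL), 30004 (RabbitMQ management UI), and 30005 (MongoDB).

Now copy the public IP of the node group EC2 instance.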

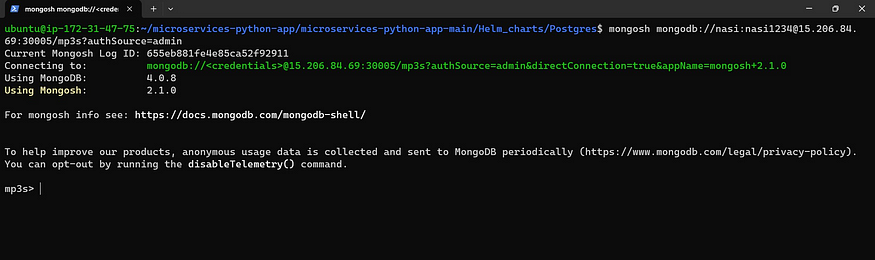
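Both steps can also be sketched from the terminal. The security group ID and the allowed CIDR are placeholders you supply. Restricting the CIDR to your own IP (`x.x.x.x/32`) is one option. The IP lookup assumes the node reports an `ExternalIP` address to Kubernetes, which EKS nodes with a public IP typically do. The function names are hypothetical.

```bash
# Sketch only: SECURITY_GROUP_ID is the node group's security group and CIDR
# is the address range allowed in (for example your own IP as x.x.x.x/32).
# Ports 30002 to 30005 are the NodePorts used later in this guide.

# open_nodeports SECURITY_GROUP_ID CIDR
open_nodeports() {
  local sg_id=$1 cidr=$2
  aws ec2 authorize-security-group-ingress \
    --group-id "$sg_id" \
    --protocol tcp \
    --port 30002-30005 \
    --cidr "$cidr"
}

# node_public_ip
# Print the ExternalIP that the first cluster node reports to Kubernetes.
node_public_ip() {
  kubectl get nodes \
    -o jsonpath='{.items[0].status.addresses[?(@.type=="ExternalIP")].address}'
}
```

For example, `NODE_IP=$(node_public_ip)` stores the address for the commands that follow.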

Go back to PuTTY and run the following command, replacing the placeholders:

```bash
# <username>: nasi
# <pwd>: nasi1234 (to change these, update the MongoDB chart's secrets.yml file)
# <nodeip>: public IP of the node group EC2 instance
mongosh "mongodb://<username>:<pwd>@<nodeip>:30005/mp3s?authSource=admin"
```

You are now connected to MongoDB. Exit the shell:

```
exit
```
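To check the connection without an interactive session, for example from a script, you could wrap the same connection string in a helper and run a single `ping` through `mongosh --eval`. The helper names are hypothetical. The sketch assumes the username and password contain no characters that need URL encoding, such as `@` or `:`.

```bash
# Hypothetical helper: mongo_uri USER PASSWORD NODE_IP
# Print the MongoDB connection string used above (NodePort 30005, database mp3s).
mongo_uri() {
  printf 'mongodb://%s:%s@%s:30005/mp3s?authSource=admin\n' "$1" "$2" "$3"
}

# Hypothetical helper: mongo_ping USER PASSWORD NODE_IP
# Run a single ping command instead of opening an interactive shell.
mongo_ping() {
  mongosh "$(mongo_uri "$1" "$2" "$3")" --quiet --eval 'db.runCommand({ ping: 1 }).ok'
}
```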

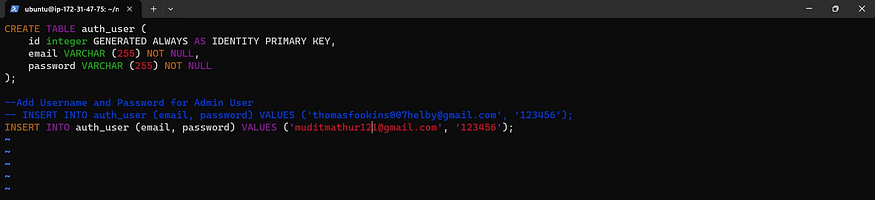

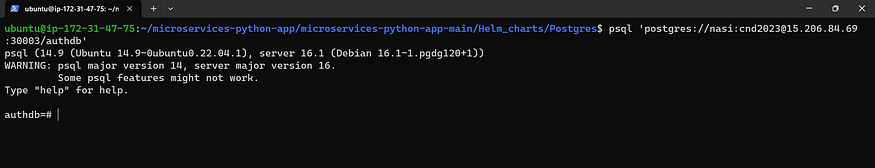

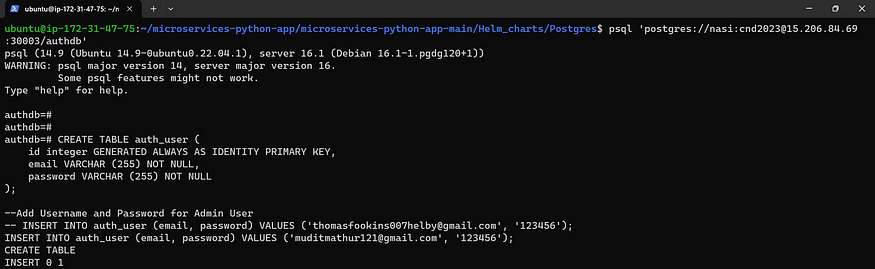

Connect to the Postgres database and copy all the queries from the “init.sql” file.

psql 'postgres://<username>:<pwd>@<nodeip>:30003/authdb'

#username nasi

#pwd cnd2023

#nodeip node group ec2 public ip

Now it’s connected to psql

Now add the init.sql file

CREATE TABLE auth_user (

id integer GENERATED ALWAYS AS IDENTITY PRIMARY KEY,

email VARCHAR (255) NOT NULL,

password VARCHAR (255) NOT NULL

);

--Add Username and Password for Admin User

-- INSERT INTO auth_user (email, password) VALUES ('thomasfookins007helby@gmail.com', '123456');

INSERT INTO auth_user (email, password) VALUES ('<User your mail>', '123456');

Use your mail for values

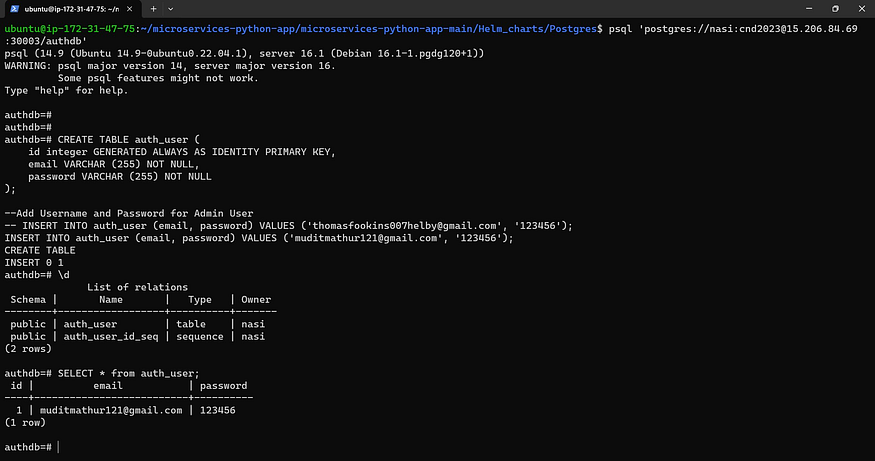

Now provide \d to see data tables

\d

Now provide the below command to see the authentication user

SELECT * from auth_user;

You will get your email and password.

Now provide an exit to come out of psql

exit
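Instead of pasting the queries, psql can run init.sql directly with `-f` after you have edited the email in it. This sketch uses the same credentials and assumes you run it from the Postgres chart directory that holds init.sql. The helper names are hypothetical. Running it a second time fails because `auth_user` already exists. `ON_ERROR_STOP` makes psql stop with a non-zero exit status at that point.

```bash
# Hypothetical helper: pg_uri USER PASSWORD NODE_IP
# Print the authdb connection string used above (NodePort 30003).
pg_uri() {
  printf 'postgres://%s:%s@%s:30003/authdb\n' "$1" "$2" "$3"
}

# Hypothetical helper: load_init_sql USER PASSWORD NODE_IP [FILE]
# Run every statement in FILE (default: init.sql) and stop at the first error.
load_init_sql() {
  local file=${4:-init.sql}
  psql "$(pg_uri "$1" "$2" "$3")" -v ON_ERROR_STOP=1 -f "$file"
}
```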

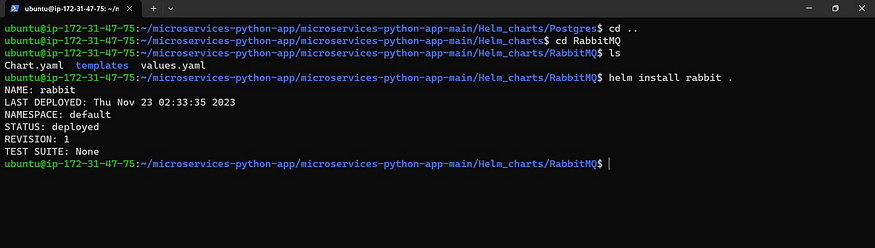

Now go into the RabbitMQ directory and deploy it (these commands assume you are in the Postgres directory):

```bash
cd ..
cd RabbitMQ
ls
helm install rabbit .
```

Check the deployments and pods:

```bash
kubectl get all
```

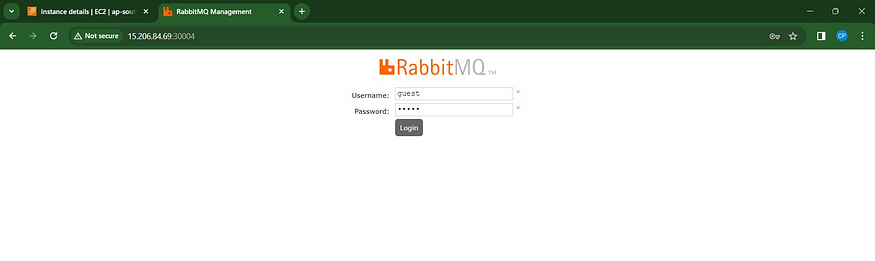

Now copy the public IP of the node group Ec2 instance

<Node-ec2-public-ip:30004>

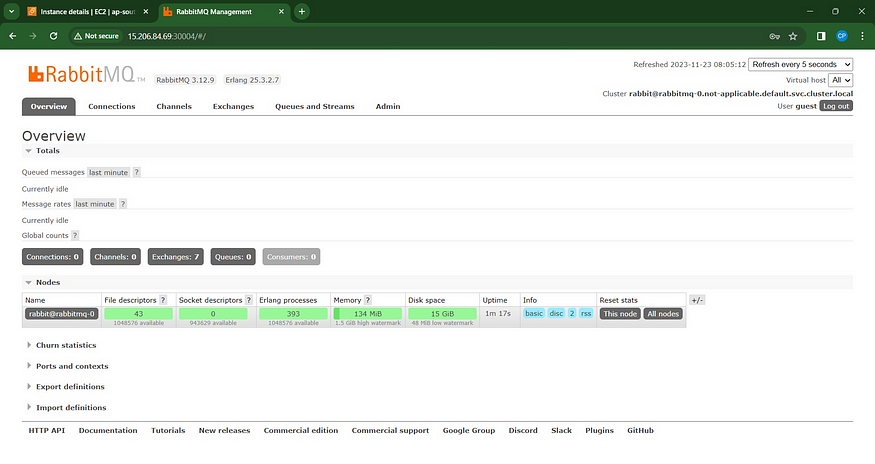

You will get this page Just login

username is guest

password is guest

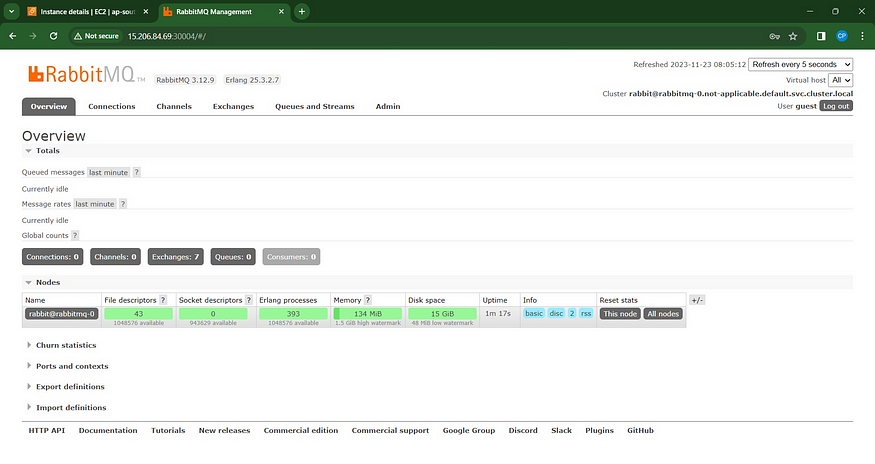

After login, you will see this page

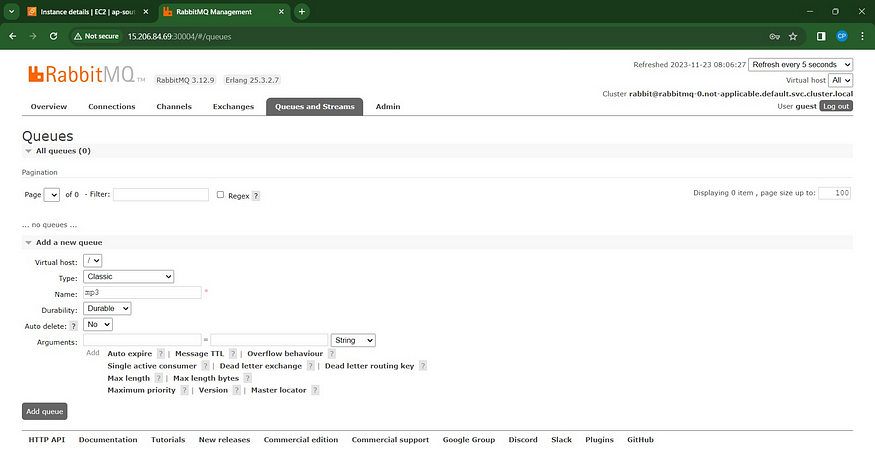

Now click on Queues

Click on Add a new Queue

Type as classic

Name mp3 → Add queue.

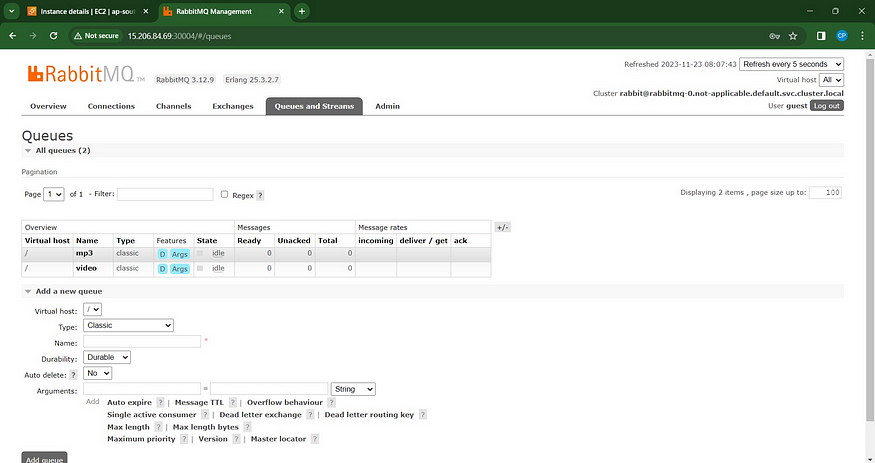

Now click again Add a new queue for Video

Type as classic → Name as video → Add queue

You will see queues like this.
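The two queues can also be created without the browser, through the RabbitMQ management HTTP API served on the same port as the UI. This sketch assumes the guest/guest credentials above and the default virtual host `/`, which is URL-encoded as `%2F`. The helper name is hypothetical.

```bash
# Sketch only: assumes the guest/guest login above and the default vhost "/".

# Hypothetical helper: declare_queue NODE_IP QUEUE_NAME
# Create a durable classic queue through the management HTTP API.
declare_queue() {
  local node_ip=$1 queue=$2
  # %2F is the URL-encoded default virtual host "/".
  curl -fsS -u guest:guest -X PUT \
    -H 'content-type: application/json' \
    -d '{"durable": true, "arguments": {"x-queue-type": "classic"}}' \
    "http://$node_ip:30004/api/queues/%2F/$queue"
}
```

To create both queues, run `for q in mp3 video; do declare_queue "$NODE_IP" "$q"; done`.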

Now go back to PuTTY and leave the Helm_charts directory.

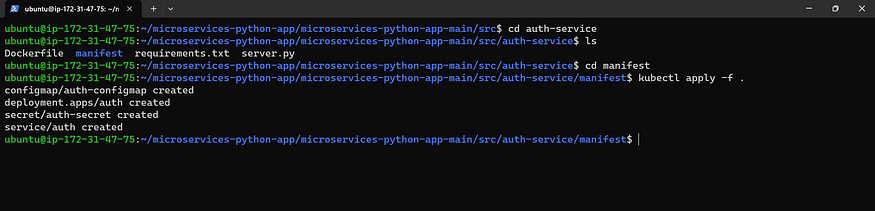

3. 🌐 Microservices

```bash
cd ../..
# You should now be in microservices-python-app-main
cd src
ls # these are the microservices
```

Each microservice directory contains a Dockerfile, so you can build your own Docker image for each microservice and use it in its deployment file.
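Building and publishing your own image for a microservice can be sketched as below. The sketch assumes the following:

- You run it from the `src` directory.
- Each service folder contains its Dockerfile.
- You are logged in to a registry with `docker login`.

The repository name you pass in, for example a Docker Hub username, is your own placeholder. After pushing, point the image field in that service's deployment manifest at the printed image name.

```bash
# Sketch only: run from the src directory after `docker login`.
# REPO is your own registry namespace, for example a Docker Hub username.

# Hypothetical helper: build_and_push SERVICE REPO [TAG]
# Build SERVICE/Dockerfile, push it, and print the full image name.
build_and_push() {
  local service=$1 repo=$2 tag=${3:-latest}
  local image="$repo/$service:$tag"
  # Send docker's progress output to stderr so stdout carries only the image name.
  docker build -t "$image" "$service" >&2 || return 1
  docker push "$image" >&2 || return 1
  echo "$image"
}
```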

Start with auth-service:

```bash
cd auth-service
ls
cd manifest # directory
kubectl apply -f .
```

Check whether the pods were created:

```bash
kubectl get all
```

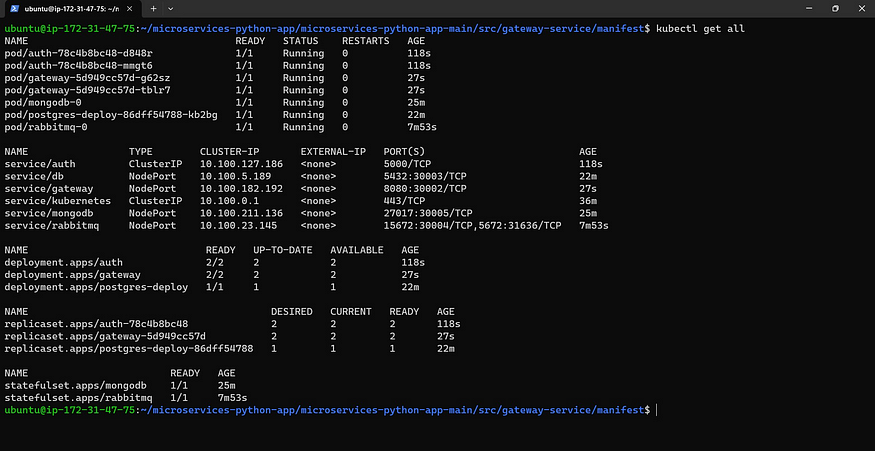

Now go back and repeat the process for gateway-service:

```bash
cd ../..
cd gateway-service
cd manifest
kubectl apply -f .
```

Check the resources:

```bash
kubectl get all
```

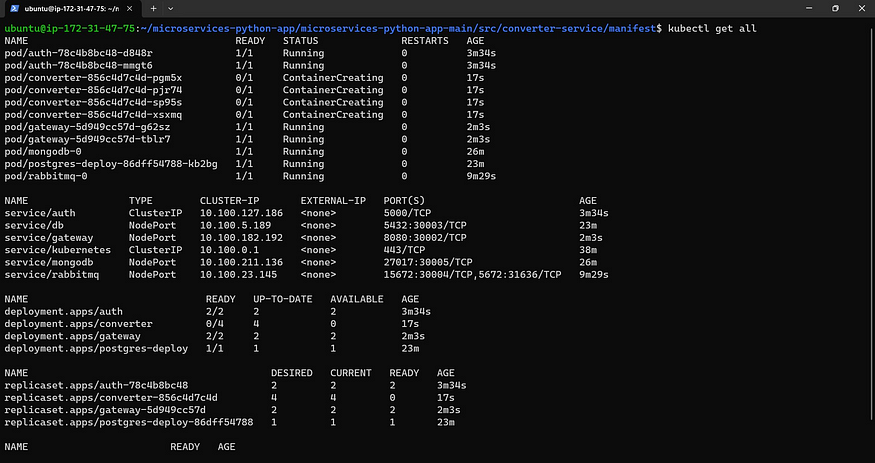

Repeat the process for converter-service:

```bash
cd ../..
cd converter-service
cd manifest
kubectl apply -f .
```

Check the deployments and services:

```bash
kubectl get all
```

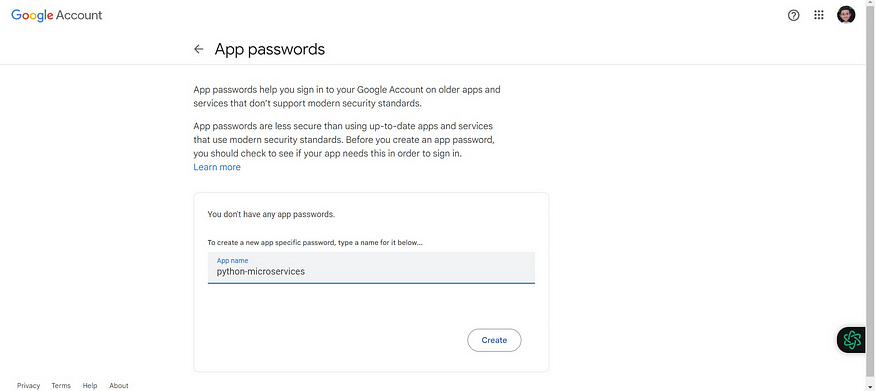

4. 📧 GMAIL PASSWORD

Now create a Gmail app password so that the notification service can send you notifications.

Open your Gmail account in the browser and click your profile picture in the top right.

Click "Manage your Google Account."

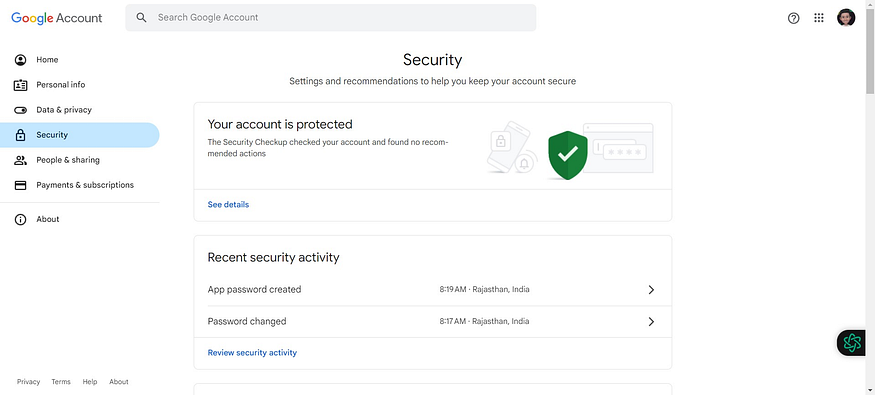

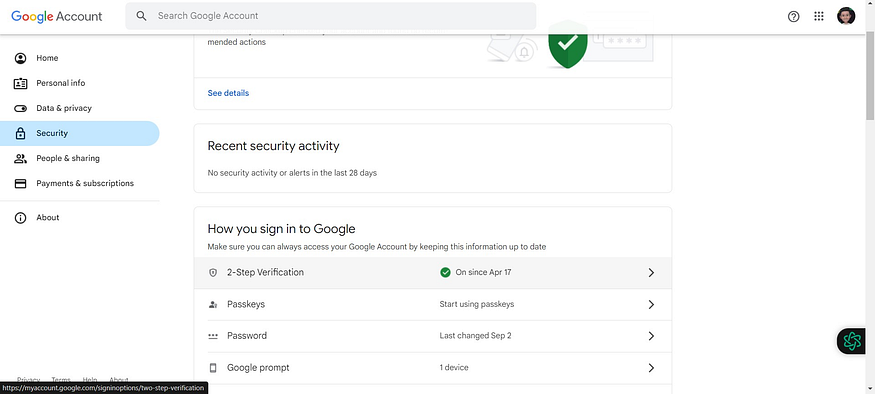

Click "Security."

2-Step Verification must be enabled.

If it is not, enable it.

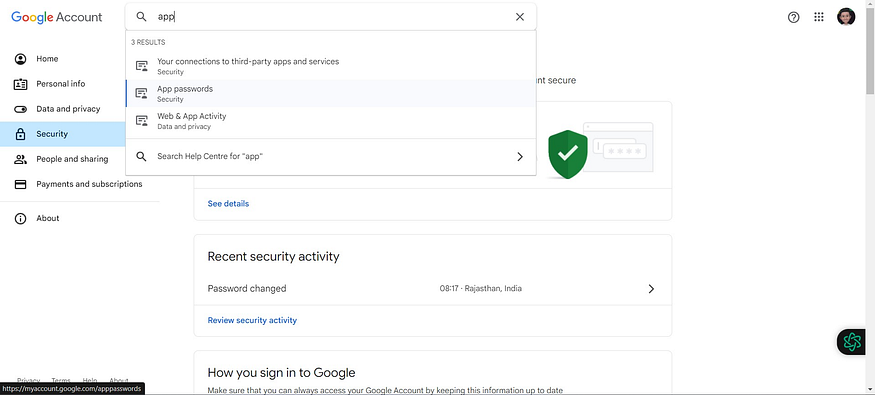

Click the search bar and search for "App passwords."

Click "App passwords."

Enter your Gmail password when prompted and sign in.

Enter any name for the app → "Create."

Copy the generated password and save it for later use.

The password is shown only once.

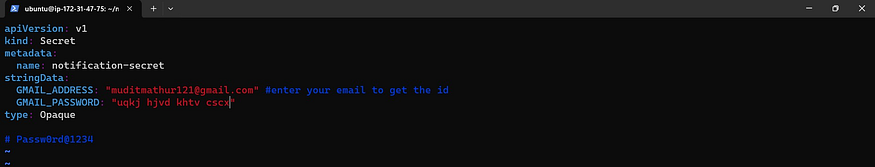

Come back to Putty and update the secret.yaml file for notification service microservice

cd ../..

cd notification-service

cd manifest

sudo vi secret.yaml

#change your email and password that were generated.

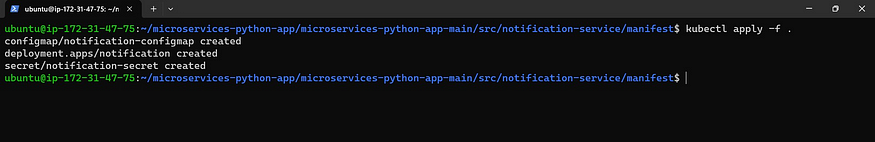

Now apply the manifest files

kubectl apply -f.

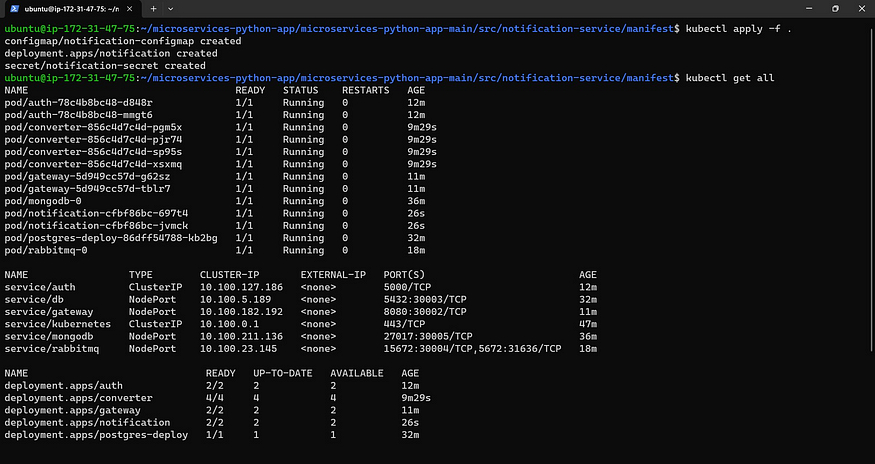

Check whether it created deployments or not.

kubectl get all

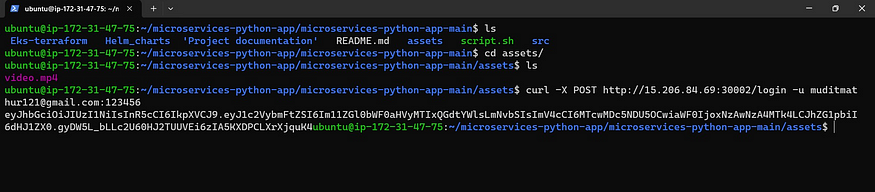

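Now go to the assets directory and log in. Replace nodeIP with the node group EC2 instance's public IP, and use the email and password you inserted into auth_user:

```bash
cd /home/ubuntu/microservices-python-app/microservices-python-app-main
cd assets
ls
curl -X POST http://nodeIP:30002/login -u "<email>:<password>"
```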

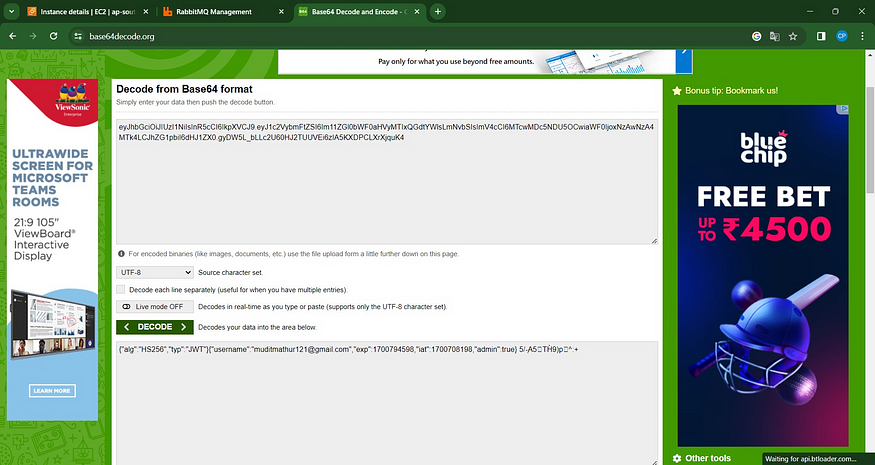

The response is a JWT. Copy it and paste it into a Base64 (or JWT) decoder to see its contents:

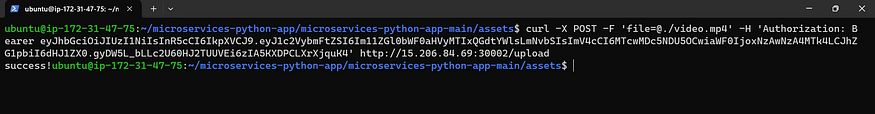
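You don't need to paste the token into a website. A JWT is three base64url-encoded segments separated by dots, and the middle segment is the JSON payload. A minimal local decoder sketch follows. It assumes GNU coreutils `base64`, which is present on the Ubuntu instance. It only decodes the payload and does not verify the signature. The function name is hypothetical.

```bash
# Hypothetical helper: jwt_payload TOKEN
# Print the decoded JSON payload (middle segment) of a JWT.
jwt_payload() {
  local payload
  # Take the middle segment and map base64url characters back to base64.
  payload=$(printf '%s' "$1" | cut -d. -f2 | tr '_-' '/+')
  # base64url drops "=" padding; restore it so base64 -d accepts the input.
  case $(( ${#payload} % 4 )) in
    2) payload="$payload==" ;;
    3) payload="$payload=" ;;
  esac
  printf '%s' "$payload" | base64 -d
}
```

For example, `jwt_payload "$TOKEN"` prints the payload of the token returned by `/login`.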

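Now upload the sample video. Replace <JWT Token> with your token and nodeIP with the node group EC2 instance's public IP:

```bash
curl -X POST -F 'file=@./video.mp4' -H 'Authorization: Bearer <JWT Token>' http://nodeIP:30002/upload
```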

It will send an ID to the mail

Let’s check the mail

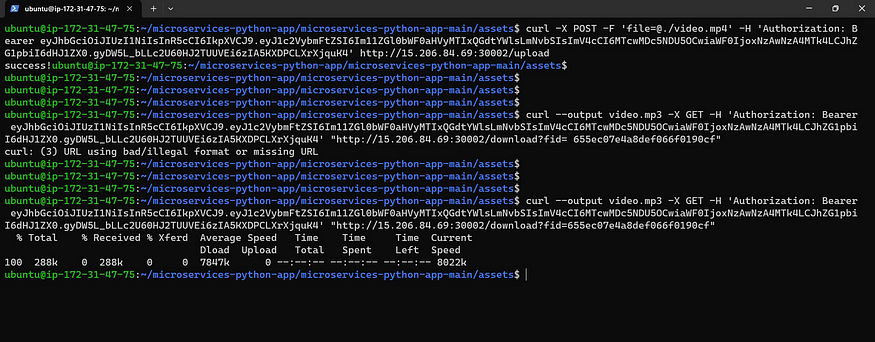

Copy that ID and paste it inside the below command with the JWT token and fid

curl --output video.mp3 -X GET -H 'Authorization: Bearer ' "http://nodeIP:30002/download?fid="

#change Bearer with JWT token

#nodeIp

#fid token at end

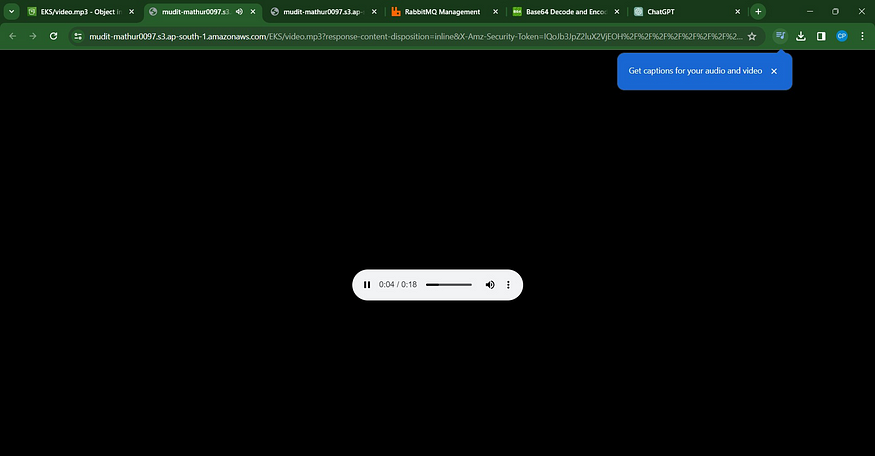
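The login, upload, and download calls can be wrapped in small functions so that the token is captured once and reused. This sketch makes these assumptions:

- NODE_IP is the node group EC2 instance's public IP.
- The gateway listens on NodePort 30002.
- `/login` returns the bare JWT as its response body, as the output above suggests.

The function names are hypothetical.

```bash
# Sketch only: NODE_IP is the node group EC2 instance's public IP and the
# gateway listens on NodePort 30002. Assumes /login returns the bare JWT.
GATEWAY_PORT=30002

# Hypothetical helper: gateway_login NODE_IP EMAIL PASSWORD -> prints the JWT
gateway_login() {
  curl -fsS -X POST "http://$1:$GATEWAY_PORT/login" -u "$2:$3"
}

# Hypothetical helper: gateway_upload NODE_IP TOKEN FILE
gateway_upload() {
  curl -fsS -X POST -F "file=@$3" \
    -H "Authorization: Bearer $2" \
    "http://$1:$GATEWAY_PORT/upload"
}

# Hypothetical helper: gateway_download NODE_IP TOKEN FID OUTPUT_FILE
gateway_download() {
  curl -fsS --output "$4" -X GET \
    -H "Authorization: Bearer $2" \
    "http://$1:$GATEWAY_PORT/download?fid=$3"
}
```

A typical sequence is:

1. `TOKEN=$(gateway_login "$NODE_IP" "<email>" "<password>")`
2. `gateway_upload "$NODE_IP" "$TOKEN" ./video.mp4`
3. Once the email arrives, `gateway_download "$NODE_IP" "$TOKEN" "<fid>" video.mp3`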

This downloads the MP3 file. List the directory to confirm that video.mp3 was created; you can then play it.

```bash
ls
```

Arrryyy Elvish bhai ke aage koi bol sakta hai kyaa ("Hey, can anyone dare speak up in front of Elvish bhai?")

Ehhhhhhh L-Wiiiish bhaiiieeeeee.......

Video.mp4

Video.mp3

To listen to the file on your own machine, copy it to an S3 bucket and download it from there:

```bash
aws s3 cp video.mp3 s3://your-bucket/your-prefix/
```

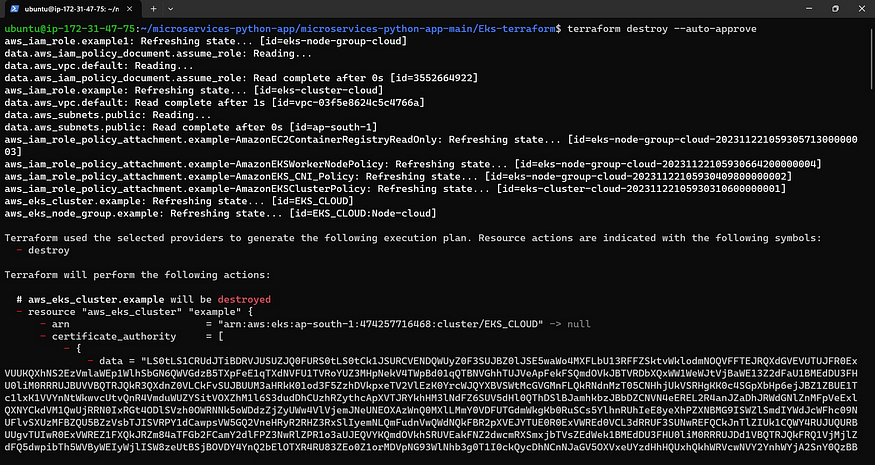

5. 💣 Destroy

To delete the EKS cluster, go into the Eks-terraform directory and run:

```bash
terraform destroy --auto-approve
```

Deleting the cluster takes about 10 minutes.

Once that is done, terminate your EC2 instance and delete the IAM role.
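The final cleanup can also be sketched with the AWS CLI. Run it from your own machine rather than from the EC2 instance, because it terminates that instance. The sketch assumes the following:

- The instance profile has the same name as the role, which is what the console does when you create an EC2 role.
- Only the AdministratorAccess policy is attached.

IAM refuses to delete a role that still has attached policies or belongs to an instance profile, which is why those steps come first. The function name is hypothetical.

```bash
# Sketch only: run from your own machine, since this terminates the EC2
# instance. Assumes the instance profile is named like the role (the console
# default) and that only AdministratorAccess is attached.

# Hypothetical helper: cleanup_instance_and_role INSTANCE_ID ROLE_NAME
cleanup_instance_and_role() {
  local instance_id=$1 role=$2
  aws ec2 terminate-instances --instance-ids "$instance_id" || return 1
  aws ec2 wait instance-terminated --instance-ids "$instance_id" || return 1
  # IAM only deletes a role once it is in no instance profile and has no
  # attached managed policies, so detach everything first.
  aws iam remove-role-from-instance-profile \
    --instance-profile-name "$role" --role-name "$role" || return 1
  aws iam delete-instance-profile --instance-profile-name "$role" || return 1
  aws iam detach-role-policy --role-name "$role" \
    --policy-arn arn:aws:iam::aws:policy/AdministratorAccess || return 1
  aws iam delete-role --role-name "$role"
}
```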